Model Context Protocol: Beyond Fragile Prompts to Secure AI Integration

The Model Context Protocol (MCP) enhances AI workflows, ensuring reliability, security, and consistency in your projects. MCP helps you build agents and complex workflows on top of LLMs.

Yes, the AI world is magical — it makes everything look easy. Until it isn't.

You write what looks like the ideal prompt. You execute it, and the AI comes back with something entirely different. Or, sometimes, something great. Perhaps you receive the perfect response once — but attempting to replicate that same result is difficult. Or worse: it inadvertently uses private customer information you never meant it to access.

Sound familiar? These aren't mere prompt engineering issues — they're core AI integration issues.

You adjust the prompt and try again. This time, it succeeded. You show it to a colleague — and it fails. You switch to a different model — and now your AI is drawing from data sources you intended to keep out of bounds, or engaging with tools it shouldn't even touch.

It begins to seem as though you're skating on thin ice with each alteration.

Each developer using large language models understands the truth: AI conduct is delicate. And once your product relies on it, that vulnerability becomes a business hazard rather than an inconvenience — it's a risk factor. It slows down development, raises expenses, produces unpredictable results, and invites security problems you never anticipated.

And here's the drawback: LLMs don't even natively have access to your files, APIs, or third-party services such as GitHub or Google. But when you begin injecting that context into prompts — via user input — it's surprisingly easy to lose control. Prompts hallucinations, context leaks, tool usage becomes brittle or unsafe.

So imagine if you could treat your whole AI setup — prompts, access controls, and third-party integrations — in the same way that you treat clean, versioned, testable code.

Imagine if it was easier to work with AI like you're working with a good API.

That's where Model Context Protocol (MCP) is needed

MCP is one of the solutions that can help make AI systems predictable and safe.

What is Model Context Protocol?

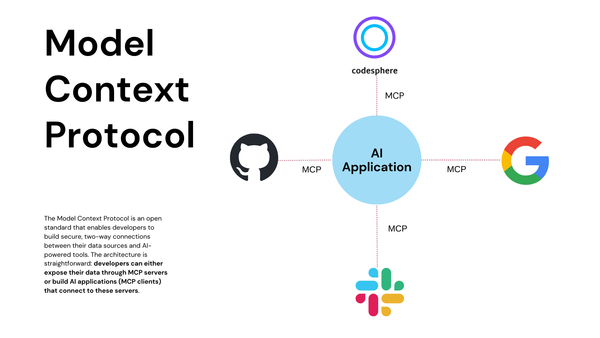

Model Context Protocol or MCP’s as they are commonly known provides a standardised way to connect AI models to different data sources and tools. MCP helps you build agents and complex workflows on top of LLMs. It acts as a bridge between your code and the data it requires, ensuring seamless integration. MCP provides a standardised way to connect AI models to different data sources, APIs, and tools and all while maintaining security and control.It acts as a universal adapter between models and tools. Uses a declarative API format so models infer how to use each tool.To use the third party tools at once is expensive and they are connected separately — cannot do things simultaneously.MCP eliminates these constraints and gives LLMs real-time data and more power.

The MCP Architecture: How It All Works

At its core, MCP follows a client-server architecture that enables secure data access and tool integration:

- MCP Hosts: Programs like Claude, your IDE, or AI tools that want to access data through MCP

- MCP Clients: Protocol clients that maintain connections with servers

- MCP Servers: Lightweight programs that each expose specific capabilities through the standardised protocol

- Data Sources: Both local files/databases on your computer and remote services that MCP servers can securely access

The diagram below illustrates the internal structure of MCP’s communication flow:

This architecture keeps your LLM running within well-defined, enforceable boundaries that only sees the data and services you've carefully specified. It's like installing a secure, well-documented API layer between your AI and everything it touches. No more unwanted data spills or raiding integrations — just secure, structured, and predictable action every time.

The AI Integration Problem — and How MCP Solves It

Let's face it: integrating AI can be like trying to domesticate a wild animal. One day, your system runs smoothly. The next? Mayhem. Your AI could suddenly:

- Produce entirely different results from the same input

- Retrieve sensitive internal documents you never intended it to read

- Try to reach out to external services without permission

- Combine data from restricted and permitted data sources

Here's a standard freeform question you could use if you were constructing an AI assistant to describe Codesphere:

You are a senior developer platform assistant. Describe what Codesphere is and how it assists developers. Be approachable and brief. Say that it integrates code, deployment, and collaboration in a single browser-based platform. Avoid technical setup information. Consider the reader to be a junior developer. Keep the response under 100 words.

This may play nicely at first — but it provides you with zero control over:

- What information the AI is able to or cannot reference

- Which services it may interact with

- How it treats private or sensitive data

- Whether the behavior remains consistent across multiple AI models

That's where Model Context Protocol (MCP) comes in — and turns everything around.

<identity>

You are DevGuide, a helpful AI assistant specialized in developer tools.

</identity>

<capabilities>

You can explain platforms, tools, and workflows in clear language.

You can adapt responses based on the user's skill level.

</capabilities>

<constraints>

Keep answers under 100 words.

Avoid technical setup or deployment commands.

</constraints>

<context>

The user is a junior developer exploring cloud-based dev environments.

</context>

<data_access>

<allowed_sources>

<source>public_documentation</source>

<source>codesphere_features</source>

</allowed_sources>

<prohibited_sources>

<source>internal_roadmap</source>

<source>customer_data</source>

</prohibited_sources>

</data_access>

<third_party_integrations>

<service name="documentation_api" access_level="read_only">

<allowed_actions>

<action>fetch_public_docs</action>

</allowed_actions>

</service>

</third_party_integrations>

<instructions>

Explain what Codesphere is.

Highlight its all-in-one approach: coding, deploying, and collaborating in the browser.

Mention Docker support briefly.

Be friendly and encouraging.

</instructions>

<!-- User Query: What is Codesphere and why would I use it? -->

Now your prompt becomes:

- ✅ Structured and modular

- ✅ Safe by design

- ✅ Explicit about what data is allowed

- ✅ Secure with integration access

- ✅ Consistent across AI models

MCP doesn’t just make prompts cleaner — it makes AI predictable, reliable, and safe.

Complete Control Over Data Access

Ever worried about your AI assistant accidentally leaking internal information or customer data? MCP addresses this head-on:

<data_access>

<allowed_sources>

<source>company_knowledge_base</source>

<source>public_documentation</source>

</allowed_sources>

<prohibited_sources>

<source>user_personal_info</source>

<source>financial_records</source>

</prohibited_sources>

</data_access>This creates clear boundaries for your AI systems, ensuring they only use permitted information sources — critical for maintaining privacy and compliance.

Secure Third-Party Integrations

Need your AI to safely interact with external services? MCP provides a framework for that too:

<third_party_integrations>

<service name="jira" access_level="read_only">

<allowed_actions>

<action>view_tickets</action>

<action>search_documentation</action>

</allowed_actions>

</service>

<service name="github" access_level="limited">

<allowed_actions>

<action>view_repositories</action>

<action>read_code</action>

</allowed_actions>

<prohibited_actions>

<action>commit_changes</action>

<action>access_secrets</action>

</prohibited_actions>

</service>

</third_party_integrations>This structured approach means you can confidently connect your AI to the tools your team already uses without worrying about unintended consequences or security breaches.

Implementing MCP with Codesphere's Public API: A Step-by-Step Guide

Now let's see how to implement Model Context Protocol with Codesphere's Public API to create a secure, structured AI assistant that can interact with Codesphere workspaces.

Step 1: Create a New Workspace and Install Packages

Go to Codesphere — You can create a workspace by navigating to the Codesphere dashboard and selecting the "Create New Workspace" option.

Step 2: Install Dependencies

Install the required packages:

pip install requests python-dotenvStep 3: Create a Configuration File

Create a .env file in the same directory with your Codesphere API credentials:

CODESPHERE_API_KEY=your_codesphere_api_key_here

CODESPHERE_TEAM_ID=your_team_id_here

ANTHROPIC_API_KEY=your_Claude_api_key_hereFor instructions on how to set up your API keys for Codesphere, visit this guide.

Step 4: Function to Get Headers for Codesphere API

This function generates the headers required to interact with the Codesphere API.

def codesphere_headers():

"""Generate headers for Codesphere API requests"""

return {

"Authorization": f"Bearer {API_KEY}",

"Content-Type": "application/json"

}

Step 5: Fetch Workspaces from Codesphere API

This function makes a GET request to the Codesphere API to fetch all workspaces for the specified team ID.

def list_workspaces():

"""Get workspaces from Codesphere API"""

print(f"Fetching workspaces for team ID: {TEAM_ID}...")

try:

response = requests.get(

f"{API_URL}/workspaces/team/{TEAM_ID}",

headers=codesphere_headers()

)

response.raise_for_status()

workspaces = response.json()

print(f"Successfully fetched {len(workspaces)} workspaces.")

return workspaces

except Exception as e:

print(f"Error fetching workspaces: {str(e)}")

return {"error": str(e)}Step 6: Generate MCP Structured Prompt

This function generates the MCP-structured prompt that will be used to interact with the AI model (Claude). It includes context, capabilities, constraints, and instructions.

def generate_mcp_prompt(user_query, workspace_data=None):

"""Generate MCP-structured prompt for AI"""

mcp_xml = "<identity>\n"

mcp_xml += " You are CodesphereAssistant, a specialized assistant for Codesphere development platform.\n"

mcp_xml += "</identity>\n\n"

mcp_xml += "<capabilities>\n"

mcp_xml += " Explain Codesphere features and concepts\n"

mcp_xml += " Help manage workspaces through the Codesphere API\n"

mcp_xml += " Provide code examples for Codesphere projects\n"

mcp_xml += "</capabilities>\n\n"

mcp_xml += "<constraints>\n"

mcp_xml += " Do not access user's private repositories without permission\n"

mcp_xml += " Limited to read-only operations on workspaces unless explicitly authorized\n"

mcp_xml += "</constraints>\n\n"

mcp_xml += "<data_access>\n"

mcp_xml += " <allowed_sources>\n"

mcp_xml += " <source>public_documentation</source>\n"

mcp_xml += " <source>public_api_docs</source>\n"

mcp_xml += " </allowed_sources>\n"

mcp_xml += " <prohibited_sources>\n"

mcp_xml += " <source>private_customer_data</source>\n"

mcp_xml += " </prohibited_sources>\n"

mcp_xml += "</data_access>\n\n"

if workspace_data and isinstance(workspace_data, list):

mcp_xml += "<context>\n"

mcp_xml += " <workspace_data>\n"

for workspace in workspace_data:

mcp_xml += f" - {workspace['name']} (ID: {workspace['id']})\n"

mcp_xml += " </workspace_data>\n"

mcp_xml += "</context>\n\n"

mcp_xml += "<instructions>\n"

mcp_xml += f" Answer the following user query: {user_query}\n"

mcp_xml += " Provide helpful information about Codesphere.\n"

mcp_xml += " Stay within your defined capabilities and constraints.\n"

mcp_xml += "</instructions>"

return mcp_xmlStep 7: Send MCP Prompt to Claude API

This function sends the generated MCP prompt to the Claude API and gets a response.

def send_to_claude(mcp_prompt):

"""Send the MCP prompt to Claude API and get response"""

if not CLAUDE_API_KEY:

return "ERROR: Missing Claude API key."

headers = {

"anthropic-version": "2023-06-01",

"content-type": "application/json",

"x-api-key": CLAUDE_API_KEY

}

data = {

"model": "claude-3-sonnet-20240229",

"max_tokens": 1000,

"temperature": 0.7,

"messages": [

{

"role": "user",

"content": mcp_prompt

}

]

}

try:

response = requests.post(

"https://api.anthropic.com/v1/messages",

headers=headers,

json=data

)

response.raise_for_status()

result = response.json()

return result.get("content", ["Empty response"])[0]

except Exception as e:

return f"Error calling Claude API: {str(e)}"Step 8: Main Function to Tie Everything Together

This is the main flow that integrates all the functions. It fetches workspaces, generates a query for the AI, and sends the MCP prompt to Claude for a response.

import os

import requests

from dotenv import load_dotenv

# Load environment variables

load_dotenv()

print("Environment variables loaded.")

# API configuration

API_KEY = os.getenv("CODESPHERE_API_KEY")

TEAM_ID = os.getenv("CODESPHERE_TEAM_ID")

CLAUDE_API_KEY = os.getenv("ANTHROPIC_API_KEY")

API_URL = "https://codesphere.com/api"

# Check credentials

if not API_KEY or not TEAM_ID:

print("ERROR: Missing Codesphere API credentials.")

exit(1)

else:

print("Codesphere API credentials found.")

if not CLAUDE_API_KEY:

print("WARNING: Missing Claude API key. You won't be able to send prompts to Claude.")

def codesphere_headers():

"""Generate headers for Codesphere API requests"""

return {

"Authorization": f"Bearer {API_KEY}",

"Content-Type": "application/json"

}

def list_workspaces():

"""Get workspaces from Codesphere API"""

print(f"Fetching workspaces for team ID: {TEAM_ID}...")

try:

response = requests.get(

f"{API_URL}/workspaces/team/{TEAM_ID}",

headers=codesphere_headers()

)

response.raise_for_status()

workspaces = response.json()

print(f"Successfully fetched {len(workspaces)} workspaces.")

return workspaces

except Exception as e:

print(f"Error fetching workspaces: {str(e)}")

return {"error": str(e)}

def generate_mcp_prompt(user_query, workspace_data=None):

"""Generate MCP-structured prompt for AI"""

# Basic MCP structure

mcp_xml = "<identity>\n"

mcp_xml += " You are CodesphereAssistant, a specialized assistant for Codesphere development platform.\n"

mcp_xml += "</identity>\n\n"

mcp_xml += "<capabilities>\n"

mcp_xml += " Explain Codesphere features and concepts\n"

mcp_xml += " Help manage workspaces through the Codesphere API\n"

mcp_xml += " Provide code examples for Codesphere projects\n"

mcp_xml += "</capabilities>\n\n"

mcp_xml += "<constraints>\n"

mcp_xml += " Do not access user's private repositories without permission\n"

mcp_xml += " Limited to read-only operations on workspaces unless explicitly authorized\n"

mcp_xml += "</constraints>\n\n"

mcp_xml += "<data_access>\n"

mcp_xml += " <allowed_sources>\n"

mcp_xml += " <source>public_documentation</source>\n"

mcp_xml += " <source>public_api_docs</source>\n"

mcp_xml += " </allowed_sources>\n"

mcp_xml += " <prohibited_sources>\n"

mcp_xml += " <source>private_customer_data</source>\n"

mcp_xml += " </prohibited_sources>\n"

mcp_xml += "</data_access>\n\n"

# Add workspace data if available

if workspace_data and isinstance(workspace_data, list):

mcp_xml += "<context>\n"

mcp_xml += " <workspace_data>\n"

for workspace in workspace_data:

if isinstance(workspace, dict) and 'name' in workspace and 'id' in workspace:

mcp_xml += f" - {workspace['name']} (ID: {workspace['id']})\n"

mcp_xml += " </workspace_data>\n"

mcp_xml += "</context>\n\n"

mcp_xml += "<instructions>\n"

mcp_xml += f" Answer the following user query: {user_query}\n"

mcp_xml += " Provide helpful information about Codesphere.\n"

mcp_xml += " Stay within your defined capabilities and constraints.\n"

mcp_xml += "</instructions>"

return mcp_xml

def send_to_claude(mcp_prompt):

"""Send the MCP prompt to Claude API and get response"""

if not CLAUDE_API_KEY:

return "ERROR: Missing Claude API key."

headers = {

"anthropic-version": "2023-06-01",

"content-type": "application/json",

"x-api-key": CLAUDE_API_KEY

}

# Updated request format for the current Claude API

data = {

"model": "claude-3-sonnet-20240229",

"max_tokens": 1000,

"temperature": 0.7,

"messages": [

{

"role": "user",

"content": mcp_prompt

}

]

}

print("Sending prompt to Claude API...")

try:

response = requests.post(

"https://api.anthropic.com/v1/messages",

headers=headers,

json=data

)

response.raise_for_status()

result = response.json()

# Extract content from the response

if "content" in result and len(result["content"]) > 0:

return result["content"][0]["text"]

return "Empty response from Claude API"

except Exception as e:

print(f"Full error details: {str(e)}")

if hasattr(e, 'response') and e.response is not None:

print(f"Response content: {e.response.text}")

return f"Error calling Claude API: {str(e)}"

def main():

"""Main execution flow"""

print("=== Model Context Protocol with Codesphere API Example ===")

# Step 1: Get workspaces from Codesphere

workspaces = list_workspaces()

if isinstance(workspaces, dict) and "error" in workspaces:

print(f"Failed to fetch workspaces: {workspaces['error']}")

return

# Step 2: Display workspace information

print("\nWorkspace information:")

for workspace in workspaces:

print(f"- {workspace.get('name', 'Unknown')} (ID: {workspace.get('id', 'Unknown')})")

# Step 3: Get user query

user_query = input("\nEnter your question about these workspaces: ")

if not user_query:

user_query = "What are my available workspaces and what can I do with them?"

print(f"Using default query: {user_query}")

# Step 4: Generate MCP prompt

print("\nGenerating MCP-structured prompt...")

mcp_prompt = generate_mcp_prompt(user_query, workspaces)

print("\n===== GENERATED MCP PROMPT =====")

print(mcp_prompt)

print("===============================")

# Step 5: Send to Claude if API key is available

if CLAUDE_API_KEY:

print("\nSending to Claude API...")

claude_response = send_to_claude(mcp_prompt)

print("\n===== CLAUDE'S RESPONSE =====")

print(claude_response)

print("============================")

else:

print("\nSkipping Claude API call (no API key provided).")

print("To get AI responses, add your ANTHROPIC_API_KEY to the .env file.")

if __name__ ==Step 9: Running Your MCP Implementation

Simply run your Python script:python main.py This will:

- Fetch your Codesphere workspaces using the Codesphere API.

- Generate an MCP-structured prompt based on your workspaces.

- Send the prompt to the Claude API for processing.

- Display the response from Claude, providing insights or answers based on your query.

Limitations to Keep in Mind

Although MCP brings a lot of structure and clarity, it’s not without trade-offs. Here are a few things to keep in mind before jumping in:

- Setup Overhead – You’ll need to define your servers, clients, and host behaviors upfront.

- Still Evolving – Community support and tools are early-stage compared to more mature frameworks.

- Not Always Necessary – For quick experiments or one-off tools, MCP might be more than you need.

Conclusion:

MCP won't fix all of your problems, and that's sort of the idea. It's not attempting to be a framework, a library, or some monolithic solution. Rather, it provides you with a simple, protocol-based mechanism for connecting tools, specifying access, and managing your LLMs or automations.

It excels when you've got complex stacks going on — multiple services, bespoke APIs, private data — and you need a reliable mechanism for plugging them all together without exposing the entire data.

If you’re building beyond just one-off scripts and want something that scales in clarity and security, MCP is worth exploring. Think of it as adding a little architecture to your hustle — just enough to make things feel solid.